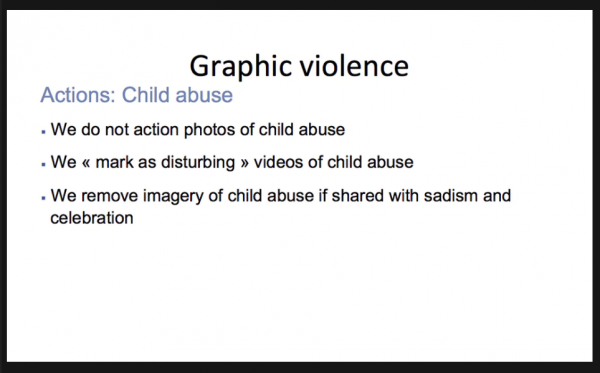

An investigation into Facebook's moderation guidelines has revealed that videos and images of non-sexual child abuse shared on the network are marked 'disturbing' but not removed.

The Guardian uncovered the secret rules and guidelines the social media site's employees have to follow when moderating content.

Employees are given over 100 training manuals, spreadsheets and flowcharts which provide a labyrinth of rules for moderators. Employees say they are overwhelmed by the volume of work, which means they often have 'just 10 seconds' to make a decision.

The website outlines a number of instances where content could be labelled as child abuse. The official rules include:

- Videos of a minor repeatedly kicked, beaten, slapped by adult

- Videos of biting through skin/burn/cut wounds inflicted on minor by adults

- Video of poisoning/strangling/suffocating/drowning inflicted on minor by adult

- Videos of repeated tossing, rotating or shaking of toddler by adult

- Toddlers smoking tobacco or marijuana where toddler is in the process of smoking or is encouraged to smoke by adult

Facebook’s policies on non-sexual child abuse show that it is trying to remain open while also banning horrific images. But is it working? Moderators remove content ‘upon report only’, meaning graphic content could be seen by any number of people before it is flagged. Facebook says publishing certain images can help children to be rescued.

"We allow 'evidence' of child abuse to be shared on the site to allow for the child to be identified and rescued, but we add protections to shield the audience," it states.

Facebook is also comfortable with imagery showing animal cruelty. Only content that is deemed “extremely upsetting” is to be marked as disturbing.

And the platform apparently allows users to live stream attempts to self-harm, because it says it “doesn't want to censor or punish people in distress”.

The only time imagery of child abuse is removed is if it is "shared with sadism and celebration".

The National Society for the Prevention of Cruelty to Children (NSPCC) has condemned Facebook's approach, describing it as "alarming".

"This insight into Facebook's rules on moderating content is alarming, to say the least," a spokesperson for the charity said.

Mark Zuckerberg recently announced Facebook would be hiring an extra 3,000 moderators in the wake of controversy over Facebook Live videos showing extreme violence and upsetting images, including a man who self-immolated and a man who killed his daughter and then himself.

The NSPCC said, "It needs to do more than hire an extra 3,000 moderators. Facebook, and other social media companies, need to be independently regulated and fined when they fail to keep children safe."